Instagram has long touted itself as a community-safe, family-friendly platform—but that claim rings hollow for cannabis and psychedelics advocates who’ve watched their educational, legal, and medically backed content get flagged, shadowbanned, or permanently erased. Meanwhile, videos glorifying violence and sexual exploitation flood Explore pages like sponsored content from hell.

This isn’t content moderation. It’s targeted censorship masquerading as public safety.

The Crackdown Continues: Now Psychedelics Are in the Crosshairs

Over the past few weeks, Meta has quietly suspended numerous psychedelics-related accounts, including institutions like the U.C. Berkeley Center for the Science of Psychedelics and community groups like Moms on Mushrooms. Accounts have been banned, reinstated, and banned again—with little explanation, no transparency, and certainly no accountability.

Even when Meta finally admits it “made a mistake,” as it did after banning the Instagram accounts for Psychedelic Science and the Psychedelic Assembly, it offers little comfort. As founder Kat Lakey put it: “I’m relieved, but also nervous about posting anything psychedelic-related right now in case it causes the account to get deleted again.” Translation: Post at your own risk, even if it’s legal, medically relevant, or part of public discourse.

This kind of whiplash enforcement is more than punitive. It sends a chilling message: if you’re talking about cannabis or psychedelics, you’re never truly safe on Meta’s platforms, even when you’re doing everything by the book.

Meta’s Message: Legally Sanctioned Plant Medicine Is Dangerous, But Booty Pics and Brawls Are Fine

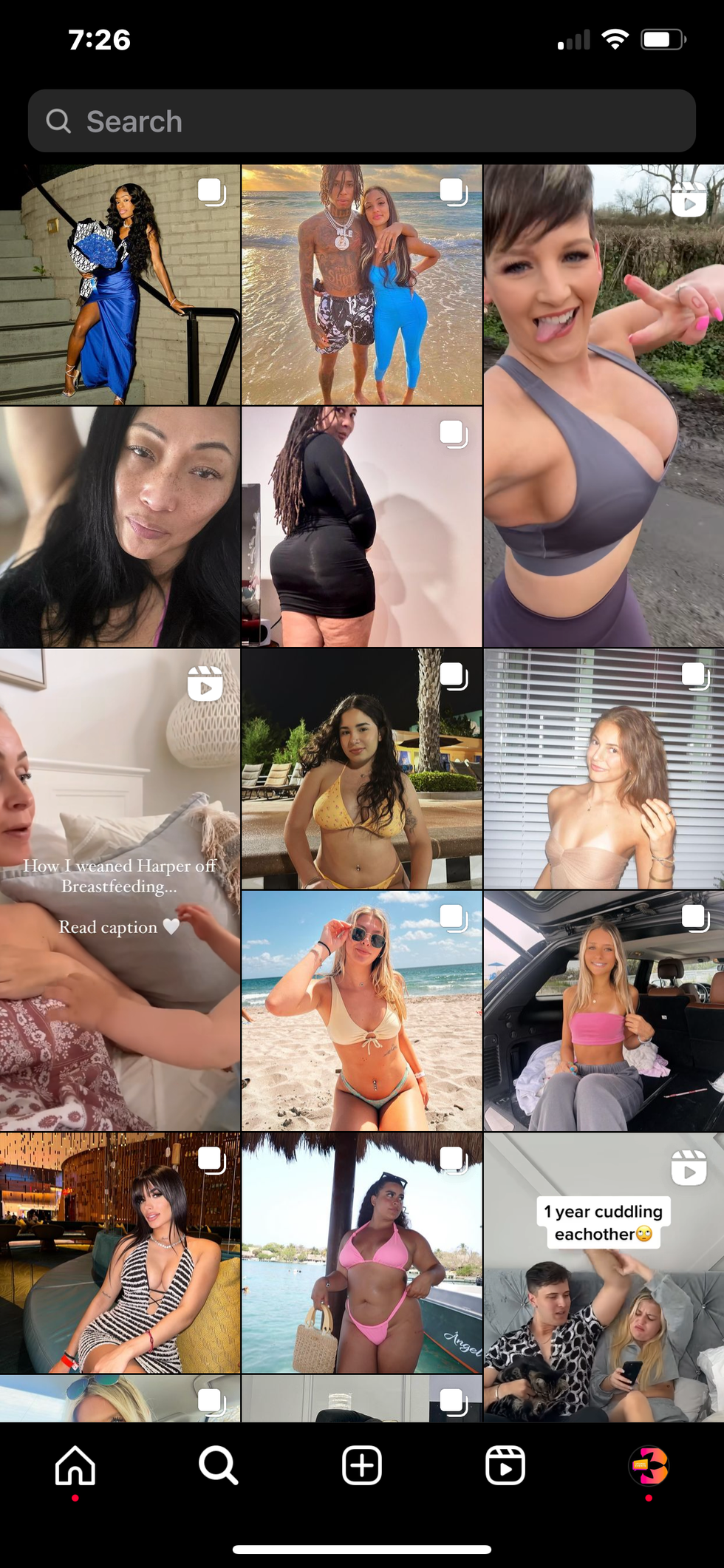

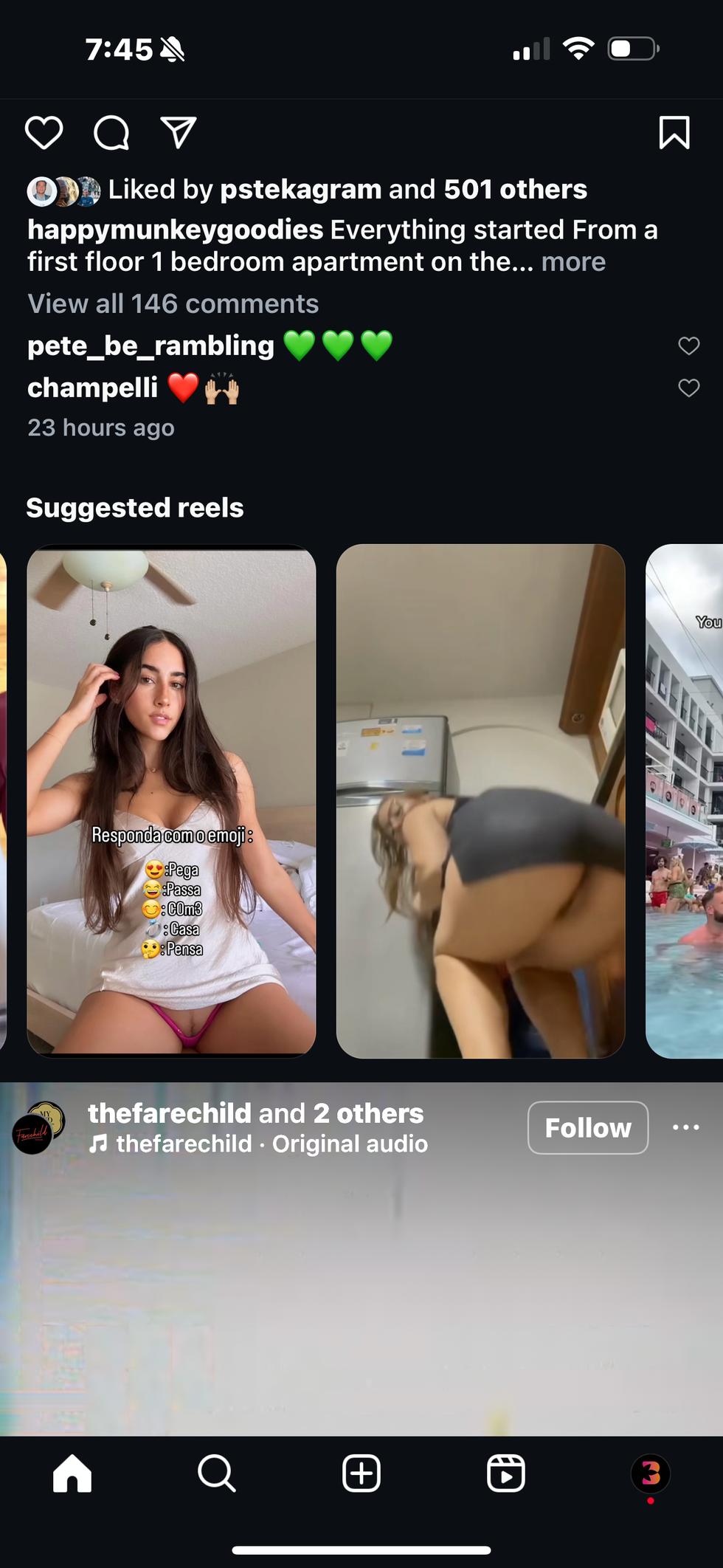

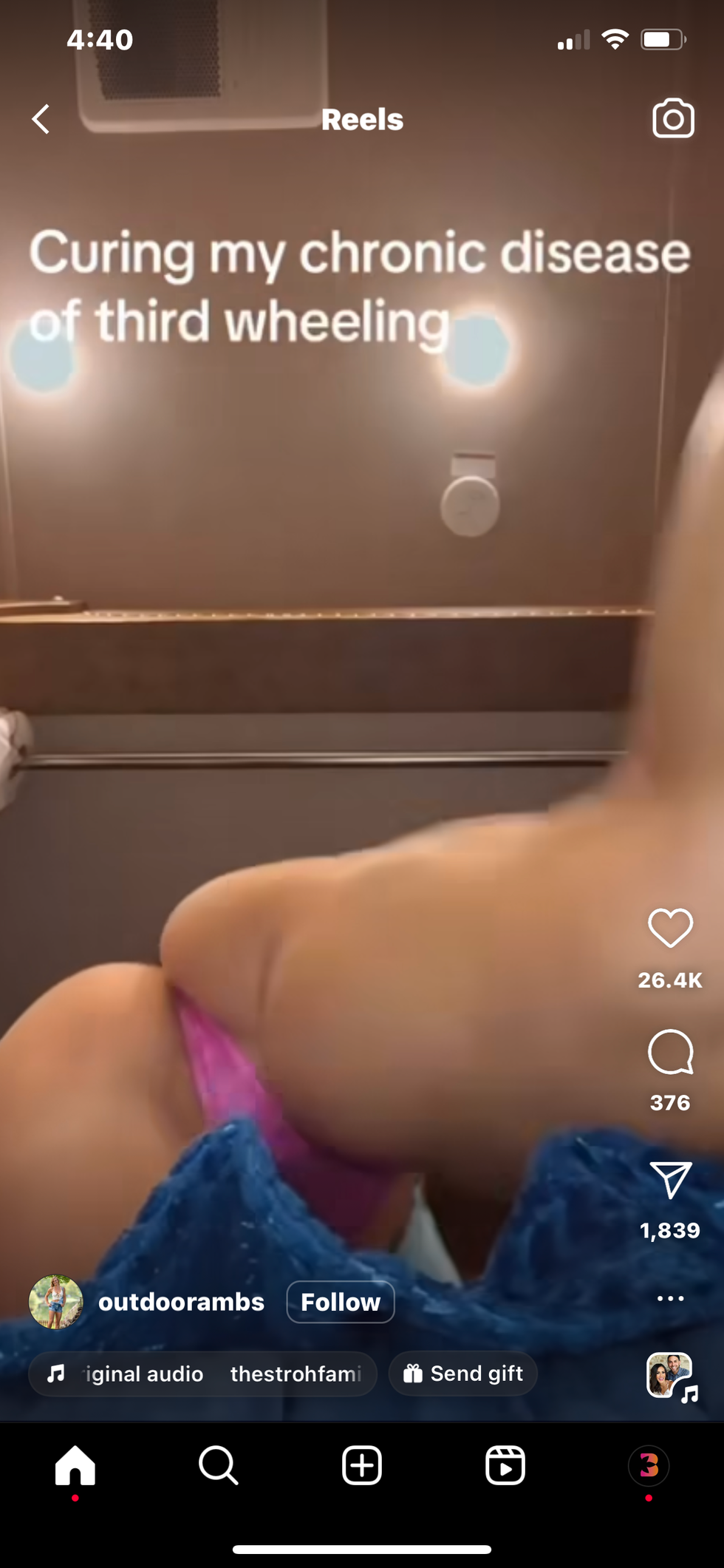

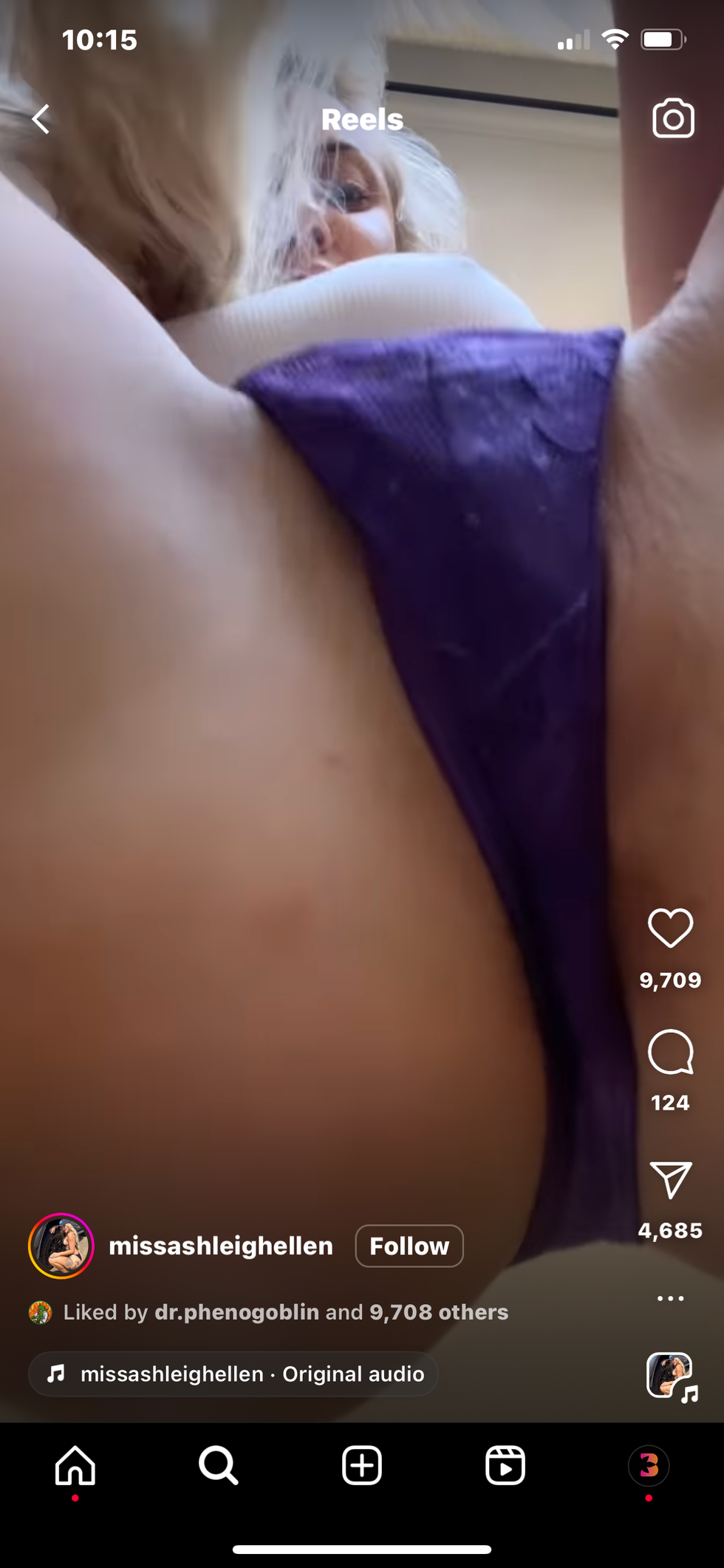

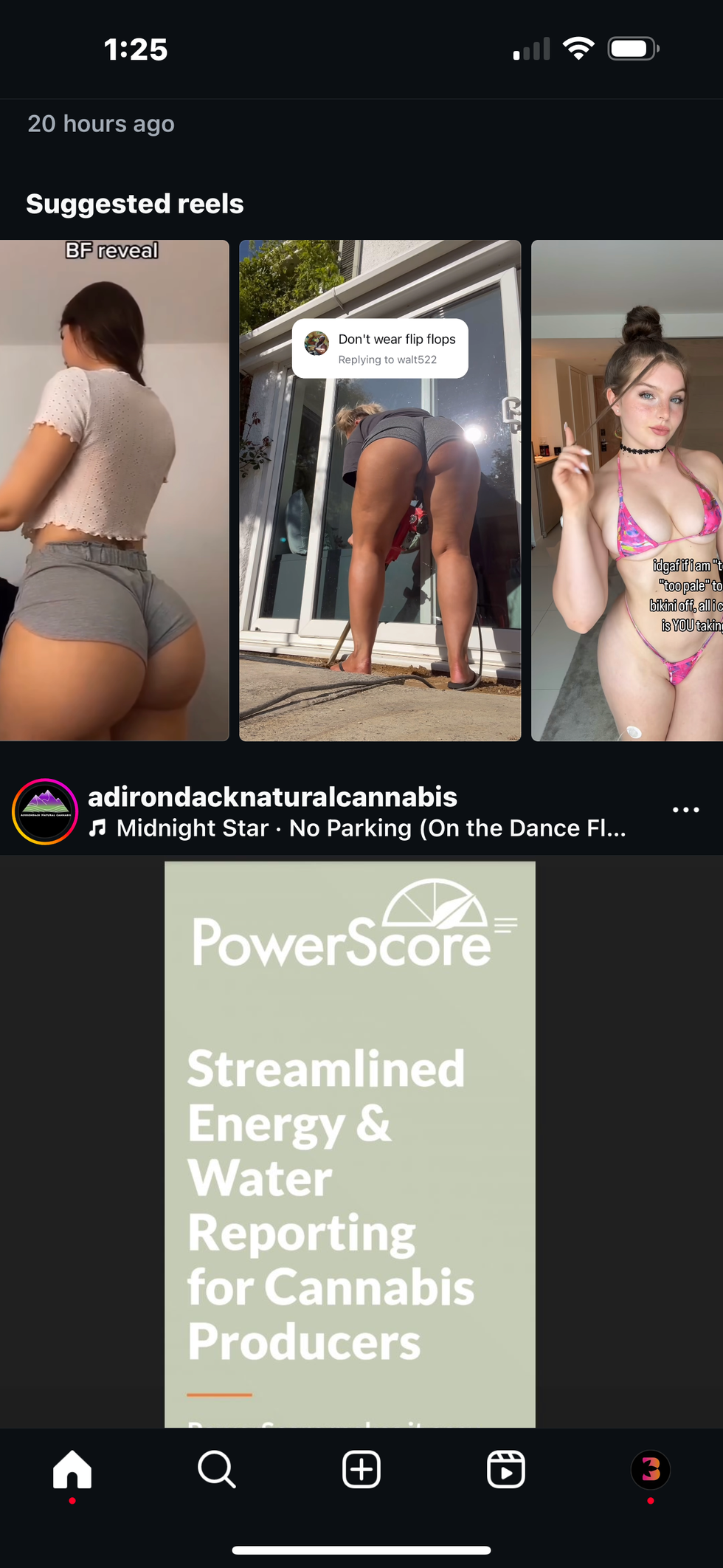

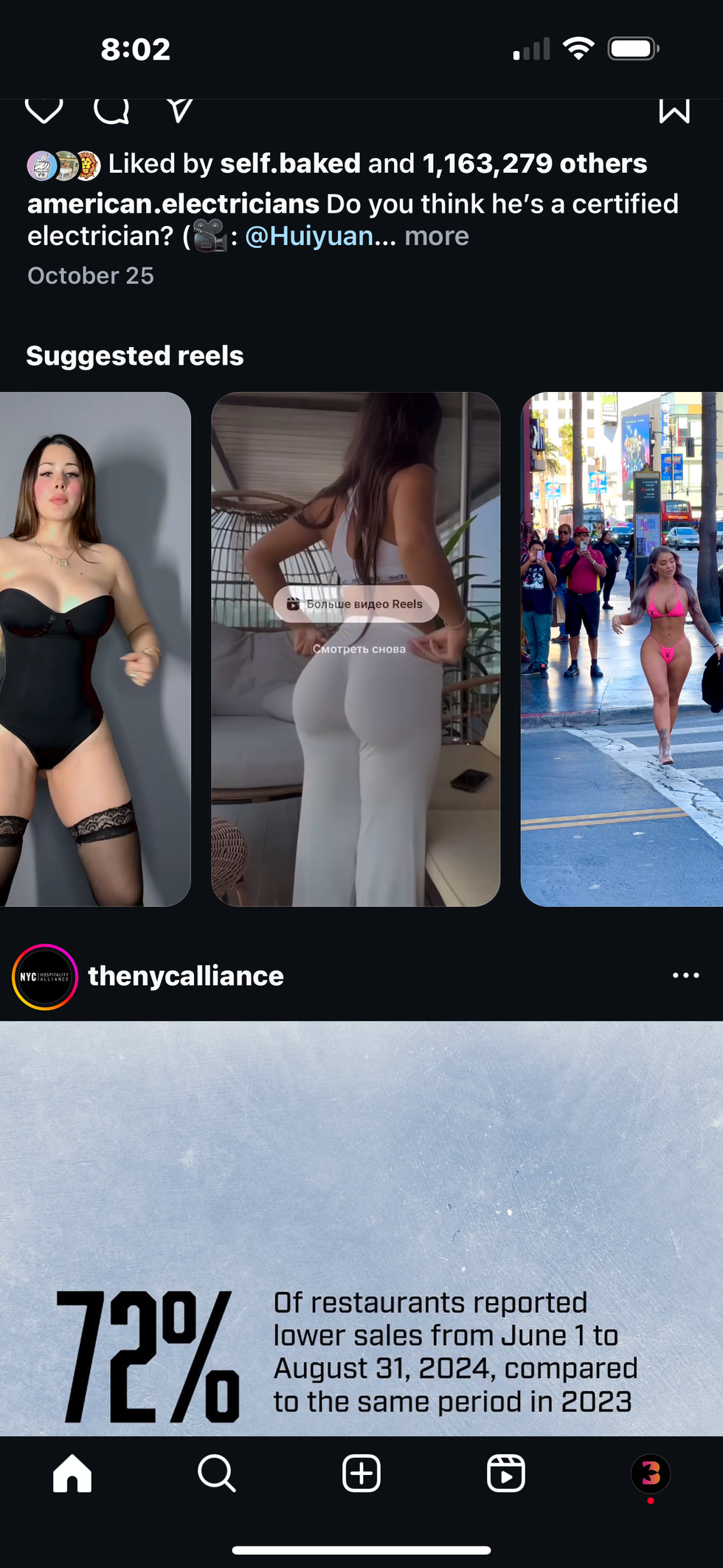

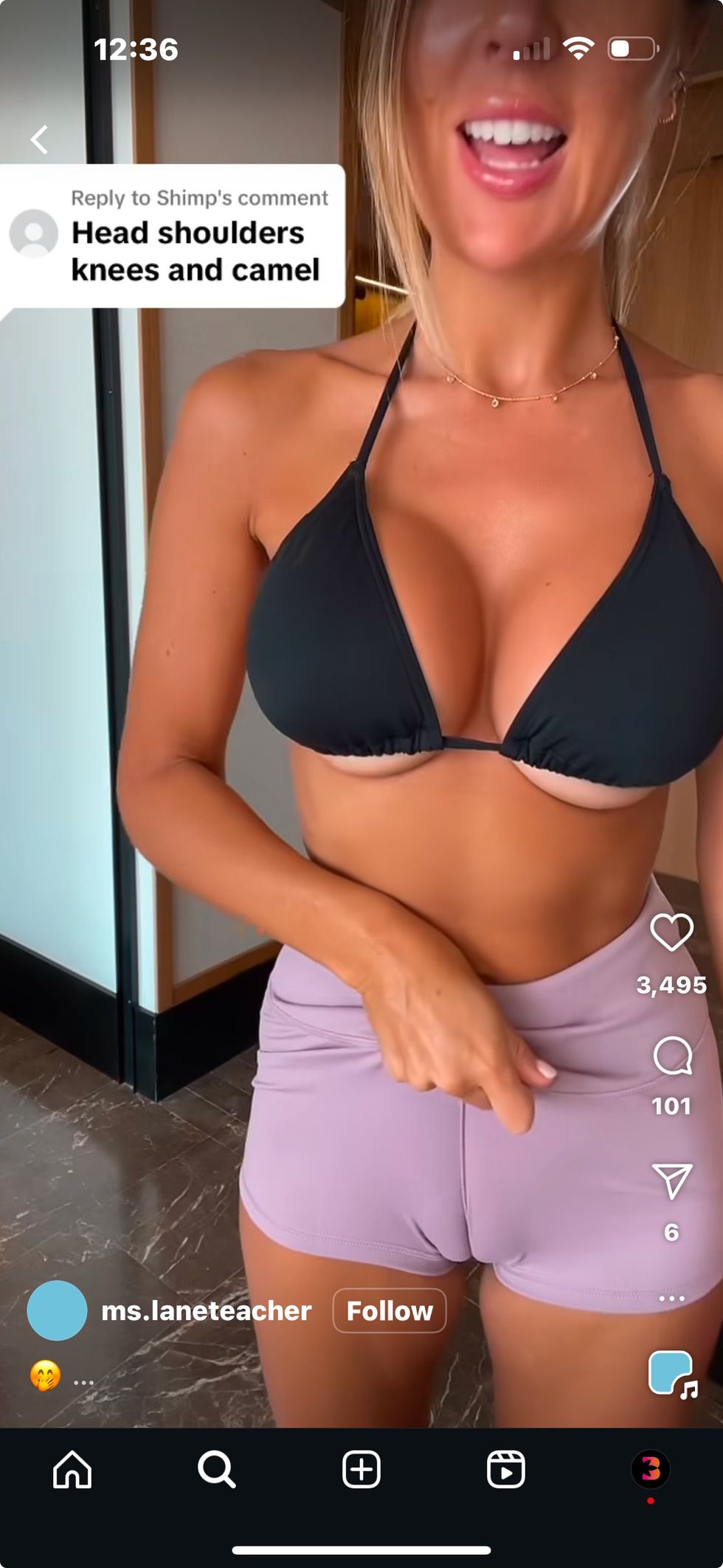

What makes all this so infuriating is not just the censorship—it’s the flagrant double standard. Instagram is perfectly fine pushing half-naked influencers and hyper-violent content to its 2.4 billion users. Its algorithm rewards rage, sex, and spectacle. You’ll never see a “community guideline violation” for an account that posts exploitative thirst traps or fight compilations. But if you post about microdosing for PTSD? Deleted.

The hypocrisy is staggering. Meta claims its cannabis and psychedelics policies exist to “keep the community safe.” Meanwhile, a Wall Street Journal report found that Instagram hosted ads for cocaine and opioids—content that’s not just “unsafe,” but outright illegal.

Let that sink in. Meta profits off clickbait pushing dangerous narcotics, but will permanently ban a mother sharing her experience with psilocybin for postpartum depression.

Let’s be clear—this is not a critique of body positivity. There’s absolutely nothing wrong with people celebrating their bodies or expressing themselves authentically. But the content dominating Instagram’s algorithm isn’t just body-positive—it’s sexually explicit, often skirting the edges of pornography, and in some cases, blatantly crossing the line.

These aren’t empowering posts about self-love; they’re material engineered for clicks, engagement, and sexual gratification, often pushed to young users via Explore feeds and recommendation algorithms. The fact that this kind of material gets algorithmic amplification while legal, educational cannabis or psychedelics content gets buried or banned shows exactly where Instagram’s priorities lie.

Real Harm, Real Costs

Instagram’s erratic, opaque moderation isn’t just an inconvenience—it’s a serious economic and social liability for small businesses, researchers, and advocacy organizations. For cannabis dispensaries and psychedelic educators, Instagram is often the single most important channel for reaching adult consumers. When that gets shut down, it’s not just a hit to engagement—it’s a hit to livelihoods.

Gina Vensel, co-founder of the Plant Media Project, has already collected nearly 60 cases of psychedelic accounts being removed or shadowbanned—most with vague, copy-paste justifications that cite “community guidelines” but explain nothing.

This level of inconsistency, where enforcement is algorithmic, appeals are arbitrary, and punishment is more severe for cannabis content than for softcore porn or digital street fights, makes one thing crystal clear: Meta’s concern isn’t user safety. It’s optics. And cannabis and psychedelics are easier to scapegoat than sex or violence, which drive far more engagement.

The Real Problem: Algorithmic Idiocy and Cultural Lag

At the core of this chaos is Meta’s lazy reliance on AI-powered moderation. These bots can’t tell the difference between a research abstract on psilocybin and a crack dealer’s come-up reel. As a result, the system overcorrects, targeting legitimate voices while letting the algorithm-juicing shock content slide right through.

Worse, the policies underpinning this enforcement are rooted in outdated, prohibition-era stigmas that haven’t caught up to the laws or the science. Cannabis is legal for medical or recreational use in dozens of states and countries. Psychedelics are being studied—and in some places decriminalized—for their therapeutic potential. But Meta still treats all of it like black-market contraband.

Time to Cut the Crap

Instagram can’t have it both ways. You can’t claim to be a platform for community, culture, and education while silencing some of the most impactful conversations in health and science—just because they don’t fit your ad-safe narrative.

If Meta is serious about building trust, it needs to start with three things:

- Transparency – Clearer guidelines and explanations when accounts are flagged.

- Consistency – If you’re going to police cannabis and psychedelics, you’d better be policing sex and violence with the same energy.

- Human Oversight – AI isn’t cutting it. Content with medical, scientific, or cultural relevance needs real review—not bot-triggered bans.

Until then, cannabis and psychedelics will remain second-class citizens in Meta’s digital empire—legal offline, but treated like contraband online. And that’s not just hypocritical. It’s dangerous.
